Manifold Learning Techniques for Signal and Visual Processing

Lecture series by Radu HORAUD,

INRIA Grenoble Rhone-Alpes

Spring (March-May) 2013


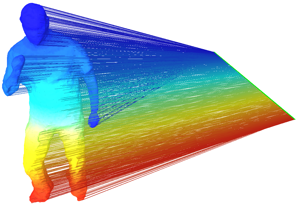

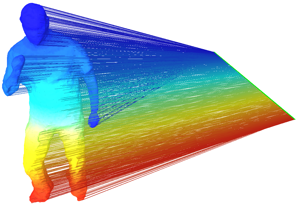

This 3D shape (left) is represented by an undirected weighted graph whose vertices correspond to 3D points and whose edges encode the local topology of the shape. Each graph vertex is projected onto the first non-null eigenvector of the graph's Laplacian matrix, i.e., the eigenvector associated with the smallest non-zero eigenvalue. This eigenvector (shown as a straight line) may be viewed as the intrinsic principal direction of the shape, invariant to shape deformations such as articulated motion.
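The construction described in the caption can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the code used to produce the figure: the bent 3D curve standing in for the shape, the symmetric k-nearest-neighbor graph with k = 4, the Gaussian edge weights, and the bandwidth heuristic are all choices made for this example, and the function names are hypothetical.

```python
import numpy as np


def knn_gaussian_graph(points, k=4, sigma=None):
    """Symmetric k-nearest-neighbor graph with Gaussian edge weights (assumed construction)."""
    n = len(points)
    # Pairwise squared Euclidean distances between the 3D points.
    sq = np.sum((points[:, None, :] - points[None, :, :]) ** 2, axis=-1)
    if sigma is None:
        # Heuristic bandwidth (an assumption): median distance to the k-th neighbor.
        sigma = np.median(np.sqrt(np.sort(sq, axis=1)[:, k]))
    W = np.zeros((n, n))
    for i in range(n):
        nbrs = np.argsort(sq[i])[1:k + 1]  # position 0 is the point itself
        W[i, nbrs] = np.exp(-sq[i, nbrs] / sigma ** 2)
    # Keep an edge if either endpoint selected the other.
    return np.maximum(W, W.T)


def fiedler_embedding(W):
    """Project every vertex onto the eigenvector of the smallest non-zero Laplacian eigenvalue."""
    L = np.diag(W.sum(axis=1)) - W  # combinatorial Laplacian L = D - W
    evals, evecs = np.linalg.eigh(L)  # eigenvalues in ascending order
    # For a connected graph only evals[0] is zero (constant eigenvector),
    # so column 1 is the first non-null eigenvector.
    return evals, evecs[:, 1]


# Hypothetical test shape: 60 points sampled along a bent 3D curve.
t = np.linspace(0.0, 1.0, 60)
points = np.column_stack([np.cos(3 * t), np.sin(3 * t), t])
evals, coords = fiedler_embedding(knn_gaussian_graph(points))
```

On this curve the 1D coordinates follow the curve parameter `t` closely (their correlation with `t` is close to +1 or -1, since the sign of an eigenvector is arbitrary), even though the curve is bent in 3D.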

| Lecture | Time and date | Location | Material |
| --- | --- | --- | --- |
| Lecture #1: Introduction to manifold learning | 10:00-12:00 13/3/2013 (Wednesday) | ENSE3 room D1121 (salle Chartreuse), GIPSA Lab | Horaud-MLSVP1.pdf |
| Lecture #2: Symmetric matrices and their properties | 10:00-12:00 20/3/2013 (Wednesday) | ENSE3 room D1121 (salle Chartreuse), GIPSA Lab | Horaud-MLSVP2.pdf |
| Lecture #3: Graphs, graph matrices, and spectral embeddings of graphs | 10:00-12:00 3/4/2013 (Wednesday) | ENSE3 room D1121 (salle Chartreuse), GIPSA Lab | Horaud-MLSVP3.pdf |
| Lecture #4: Using Laplacian embedding for spectral clustering | 10:00-12:00 10/4/2013 (Wednesday) | ENSE3 room D1121 (salle Chartreuse), GIPSA Lab | Horaud-MLSVP4.pdf |
| Lecture #5: Introduction to kernel methods and to kernel PCA | 10:00-12:00 17/4/2013 (Wednesday) | ENSE3 room D1121 (salle Chartreuse), GIPSA Lab | Horaud-MLSVP5.pdf |
| Lecture #6: Graph kernels. The heat kernel. | 10:00-12:00 7/5/2013 (Tuesday) | ENSE3 room D1121 (salle Chartreuse), GIPSA Lab | Horaud-MLSVP6.pdf |
| Lecture #7: Reading group: Wavelets on graphs via spectral graph theory (paper by D. Hammond, P. Vandergheynst, and R. Gribonval, Applied and Computational Harmonic Analysis, 2011) | 10:00-12:00 15/5/2013 (Wednesday) | ENSE3 room D1121 (salle Chartreuse), GIPSA Lab | Wavelets on graphs |
| Lecture #8: Gaussian mixtures, expectation-maximization, model-based clustering | 10:00-12:00 22/5/2013 (Wednesday) | ENSE3 room D1121 (salle Chartreuse), GIPSA Lab | Horaud-MLSVP8.pdf |
| Lecture #9: Probabilistic PCA and its extensions | 10:00-12:00 29/5/2013 (Wednesday) | ENSE3 room D1121 (salle Chartreuse), GIPSA Lab | Horaud-MLSVP9.pdf |
| Lecture #10: Short introduction to Gaussian processes | 10:00-12:00 5/6/2013 (Wednesday) | ENSE3 room D1121 (salle Chartreuse), GIPSA Lab | Horaud-MLSVP10.pdf |

Lecture #1: Introduction to manifold learning

Brief overview of spectral and graph-based methods, such as principal component analysis (PCA), multidimensional scaling (MDS), ISOMAP, locally linear embedding (LLE), Laplacian embedding, etc.

Further reading: L. Saul et al. Spectral Methods for Dimensionality Reduction. In O. Chapelle, B. Schoelkopf, and A. Zien (eds.), Semi-Supervised Learning, pages 293-308. MIT Press, Cambridge, MA, 2006.
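Two of the methods listed above, PCA and classical MDS, produce the same embedding when the input distances are Euclidean. The NumPy sketch below checks this on synthetic data; the function names `pca` and `classical_mds` and the data set are illustrative assumptions, not material from the lecture.

```python
import numpy as np


def pca(X, d):
    """Project centered data onto the d leading eigenvectors of the covariance matrix."""
    Xc = X - X.mean(axis=0)
    cov = Xc.T @ Xc / len(X)
    evals, evecs = np.linalg.eigh(cov)  # ascending order
    top = np.argsort(evals)[::-1][:d]
    return Xc @ evecs[:, top]


def classical_mds(D, d):
    """Embed n points in R^d from their n x n matrix of Euclidean distances."""
    n = D.shape[0]
    J = np.eye(n) - np.ones((n, n)) / n  # centering matrix
    B = -0.5 * J @ (D ** 2) @ J          # Gram matrix of the centered points
    evals, evecs = np.linalg.eigh(B)
    top = np.argsort(evals)[::-1][:d]
    return evecs[:, top] * np.sqrt(np.maximum(evals[top], 0.0))


rng = np.random.default_rng(0)
X = rng.normal(size=(50, 3)) * np.array([3.0, 2.0, 0.5])  # synthetic data
D = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)
Y_pca = pca(X, 2)
Y_mds = classical_mds(D, 2)  # equals Y_pca up to the sign of each column
```

Replacing the Euclidean distances `D` with geodesic distances measured along a neighborhood graph is, in essence, what ISOMAP does.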

Lecture #2: Symmetric matrices and their properties

Eigenvalues and eigenvectors. Practical computation for dense and sparse matrices. Covariance matrix. Gram matrix.
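The relation between the covariance matrix and the Gram matrix, and the difference between dense and sparse eigensolvers, can be illustrated with NumPy and SciPy. This is a sketch on synthetic data: `np.linalg.eigh` computes the full spectrum of a dense symmetric matrix, while `scipy.sparse.linalg.eigsh` (ARPACK, Lanczos iterations) returns only a few eigenpairs of a sparse one; the tridiagonal test matrix is an arbitrary choice.

```python
import numpy as np
import scipy.sparse as sp
from scipy.sparse.linalg import eigsh

rng = np.random.default_rng(0)
X = rng.normal(size=(200, 5))  # n = 200 samples in 5 dimensions (synthetic)
Xc = X - X.mean(axis=0)
n = len(Xc)

cov = Xc.T @ Xc / n  # 5 x 5 covariance matrix
gram = Xc @ Xc.T     # 200 x 200 Gram matrix of inner products

# Dense symmetric solver: all eigenvalues, in ascending order.
cov_vals, cov_vecs = np.linalg.eigh(cov)
gram_vals = np.linalg.eigvalsh(gram)

# The 5 non-zero Gram eigenvalues equal n times the covariance eigenvalues.
gram_top = gram_vals[-5:] / n

# A covariance eigenvector v maps to a unit Gram eigenvector u = Xc v / sqrt(n * lambda).
u = Xc @ cov_vecs[:, -1] / np.sqrt(n * cov_vals[-1])

# Sparse symmetric matrix: ask ARPACK for only the 3 largest eigenvalues.
m = 300
off = 0.1 * np.ones(m - 1)
A = sp.diags([off, np.arange(m, dtype=float), off], offsets=[-1, 0, 1], format="csr")
sparse_top = np.sort(eigsh(A, k=3, which="LA", return_eigenvectors=False))
dense_top = np.linalg.eigvalsh(A.toarray())[-3:]
```

Diagonalizing whichever of the two matrices is smaller and mapping its eigenvectors across, as done for `u`, avoids a large eigenproblem when the dimension and the number of samples differ greatly.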

Lecture #3: Graphs, graph matrices, and spectral embeddings of graphs

What is the matrix of a graph? Properties of graph matrices. Spectral graph theory. Undirected weighted graphs and Markov chains. Graph distances. Graph construction.
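The usual matrices of an undirected weighted graph, and two of their standard properties, can be checked numerically: the multiplicity of the zero eigenvalue of the Laplacian equals the number of connected components, and the random walk P = D^-1 W (the Markov chain associated with the graph) has a stationary distribution proportional to the vertex degrees. The function name and the two example graphs below are hypothetical.

```python
import numpy as np


def graph_matrices(W):
    """Degrees, Laplacians, and random-walk matrix of an undirected weighted graph.

    Assumes W is symmetric, non-negative, and every vertex has positive degree.
    """
    d = W.sum(axis=1)                   # vertex degrees
    L = np.diag(d) - W                  # unnormalized Laplacian D - W
    d_isqrt = 1.0 / np.sqrt(d)
    L_sym = np.eye(len(W)) - d_isqrt[:, None] * W * d_isqrt[None, :]  # I - D^-1/2 W D^-1/2
    P = W / d[:, None]                  # Markov transition matrix D^-1 W
    return d, L, L_sym, P


# Two disjoint weighted triangles: a graph with two connected components.
tri = np.array([[0, 1, 2], [1, 0, 3], [2, 3, 0]], dtype=float)
W2 = np.block([[tri, np.zeros((3, 3))], [np.zeros((3, 3)), tri]])
_, L2, Lsym2, _ = graph_matrices(W2)
n_components = int(np.sum(np.linalg.eigvalsh(L2) < 1e-9))

# A connected weighted path on 4 vertices.
W4 = np.diag([1.0, 2.0, 0.5], k=1)
W4 = W4 + W4.T
d4, L4, Lsym4, P4 = graph_matrices(W4)
pi = d4 / d4.sum()  # stationary distribution of the random walk
```

The eigenvalues of the normalized Laplacian `L_sym` always lie in [0, 2], which is one reason it is often preferred when degrees vary widely.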

Lecture #4: Using Laplacian embedding for spectral clustering

Spectral clustering. Semi-supervised spectral clustering. Links with other methods: normalized cuts and random walks on graphs. Image and shape segmentation.
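One common variant of spectral clustering, the normalized-Laplacian algorithm of Ng, Jordan, and Weiss (not necessarily the exact variant presented in the lecture), can be sketched as follows: embed the points with the k smallest eigenvectors of the normalized Laplacian, normalize the rows, then run k-means. The Gaussian bandwidth, the deterministic farthest-first k-means initialization, and the two-circle data set are assumptions chosen for the illustration.

```python
import numpy as np


def kmeans(X, k, n_iter=100):
    """Plain Lloyd iterations with a deterministic farthest-first initialization."""
    centers = [X[0]]
    for _ in range(1, k):
        dist = np.min([np.sum((X - c) ** 2, axis=1) for c in centers], axis=0)
        centers.append(X[np.argmax(dist)])
    centers = np.array(centers)
    for _ in range(n_iter):
        labels = np.argmin(np.sum((X[:, None, :] - centers[None, :, :]) ** 2, axis=-1), axis=1)
        new_centers = np.array([X[labels == j].mean(axis=0) for j in range(k)])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return labels


def spectral_clustering(X, k, sigma):
    sq = np.sum((X[:, None, :] - X[None, :, :]) ** 2, axis=-1)
    W = np.exp(-sq / (2 * sigma ** 2))  # Gaussian affinities
    np.fill_diagonal(W, 0.0)
    d_isqrt = 1.0 / np.sqrt(W.sum(axis=1))
    L_sym = np.eye(len(X)) - d_isqrt[:, None] * W * d_isqrt[None, :]
    _, vecs = np.linalg.eigh(L_sym)     # ascending eigenvalues
    U = vecs[:, :k]                     # k smallest eigenvectors as new coordinates
    U = U / np.linalg.norm(U, axis=1, keepdims=True)  # project rows onto the unit sphere
    return kmeans(U, k)


# Two concentric circles: no linear boundary separates them in the raw coordinates.
theta = np.linspace(0.0, 2 * np.pi, 50, endpoint=False)
circle = np.column_stack([np.cos(theta), np.sin(theta)])
X = np.vstack([circle, 3.0 * circle])
labels = spectral_clustering(X, k=2, sigma=0.3)
```

Running `kmeans` directly on `X` would cut both circles with a straight boundary; in the spectral embedding each circle collapses to nearly a single point, so k-means separates them easily.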

Lecture #5: Introduction to kernel methods and to kernel PCA

Properties of kernels. Kernel PCA.
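Kernel PCA replaces the covariance matrix with a centered kernel (Gram) matrix. The sketch below is a minimal version under the following assumptions: the Gaussian kernel and its parameter `gamma` are arbitrary choices, the function names are hypothetical, and only the projections of the training points are computed. With the linear kernel it reduces to ordinary PCA, which gives a convenient sanity check.

```python
import numpy as np


def rbf_kernel(X, Y, gamma=1.0):
    """Gaussian (RBF) kernel k(x, y) = exp(-gamma * ||x - y||^2)."""
    sq = np.sum((X[:, None, :] - Y[None, :, :]) ** 2, axis=-1)
    return np.exp(-gamma * sq)


def kernel_pca(K, d):
    """Project the training points onto the d leading principal axes in feature space."""
    n = K.shape[0]
    one = np.ones((n, n)) / n
    Kc = K - one @ K - K @ one + one @ K @ one  # center the implicit feature vectors
    evals, evecs = np.linalg.eigh(Kc)
    top = np.argsort(evals)[::-1][:d]
    # Unit-norm eigenvectors scaled by sqrt(eigenvalue) give the projections.
    return evecs[:, top] * np.sqrt(np.maximum(evals[top], 0.0))


rng = np.random.default_rng(0)
X = rng.normal(size=(40, 3)) * np.array([3.0, 1.5, 0.5])  # synthetic data

# Sanity check: with the linear kernel k(x, y) = x . y, kernel PCA is PCA.
Y_lin = kernel_pca(X @ X.T, 2)
Xc = X - X.mean(axis=0)
U, S, Vt = np.linalg.svd(Xc, full_matrices=False)
Y_pca = U[:, :2] * S[:2]

# Non-linear features from a Gaussian kernel.
Y_rbf = kernel_pca(rbf_kernel(X, X, gamma=0.5), 2)
```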

Lecture #8: Gaussian mixtures, expectation-maximization, model-based clustering

The univariate and multivariate Gaussian distributions. The Gaussian mixture model. The expectation-maximization (EM) algorithm for Gaussian mixtures. The curse of dimensionality.
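The EM algorithm for a Gaussian mixture alternates between computing responsibilities (E-step) and re-estimating weights, means, and covariances (M-step). The sketch below assumes full covariance matrices, a deterministic farthest-first initialization of the means, and a small ridge `reg` added to each covariance for numerical robustness; these choices and the function names are assumptions, not the lecture's prescription.

```python
import numpy as np


def log_gaussian(X, mu, cov):
    """Row-wise log N(x | mu, cov) for a full covariance matrix."""
    d = X.shape[1]
    diff = X - mu
    maha = np.sum(diff * np.linalg.solve(cov, diff.T).T, axis=1)
    _, logdet = np.linalg.slogdet(cov)
    return -0.5 * (d * np.log(2 * np.pi) + logdet + maha)


def gmm_em(X, k, n_iter=100, reg=1e-6):
    n, d = X.shape
    # Farthest-first initialization of the means (an assumed, deterministic choice).
    mu = [X[0]]
    for _ in range(1, k):
        dist = np.min([np.sum((X - m) ** 2, axis=1) for m in mu], axis=0)
        mu.append(X[np.argmax(dist)])
    mu = np.array(mu, dtype=float)
    cov = np.array([np.cov(X, rowvar=False) + reg * np.eye(d) for _ in range(k)])
    weights = np.full(k, 1.0 / k)
    loglik = []
    for _ in range(n_iter):
        # E-step: responsibilities, normalized with the log-sum-exp trick.
        log_p = np.column_stack([np.log(weights[j]) + log_gaussian(X, mu[j], cov[j])
                                 for j in range(k)])
        top = log_p.max(axis=1, keepdims=True)
        log_norm = top + np.log(np.sum(np.exp(log_p - top), axis=1, keepdims=True))
        resp = np.exp(log_p - log_norm)
        loglik.append(float(log_norm.sum()))  # log-likelihood of the current parameters
        # M-step: re-estimate mixing weights, means, and covariances.
        nk = resp.sum(axis=0)
        weights = nk / n
        mu = resp.T @ X / nk[:, None]
        for j in range(k):
            diff = X - mu[j]
            cov[j] = (resp[:, j, None] * diff).T @ diff / nk[j] + reg * np.eye(d)
    return weights, mu, cov, np.array(loglik)


rng = np.random.default_rng(0)
X = np.vstack([rng.normal([-3.0, 0.0], 1.0, size=(300, 2)),
               rng.normal([3.0, 0.0], 1.0, size=(300, 2))])
weights, means, covs, loglik = gmm_em(X, k=2)
```

The recorded log-likelihood should never decrease from one iteration to the next, which is a useful check on any EM implementation.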

Lecture #9: Probabilistic PCA and its extensions

EM algorithm for probabilistic PCA (PPCA). Bayesian PCA and model selection. Factor analysis.
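The EM algorithm for PPCA can be sketched with the standard updates of Tipping and Bishop: the E-step computes the posterior moments of the latent variables, and the M-step updates the loading matrix W and then the isotropic noise variance. The random initialization, the iteration count, and the synthetic data are assumptions; the result is compared with the closed-form maximum-likelihood solution, which fixes W only up to a rotation, so the model covariances W W^T + s2 I are compared instead.

```python
import numpy as np


def ppca_em(X, q, n_iter=2000, seed=0):
    """EM for probabilistic PCA with a q-dimensional latent space."""
    n, d = X.shape
    mu = X.mean(axis=0)  # the ML estimate of the mean is the sample mean
    Xc = X - mu
    rng = np.random.default_rng(seed)
    W = rng.normal(size=(d, q))  # random initialization (an assumption)
    s2 = 1.0
    for _ in range(n_iter):
        # E-step: posterior moments of the latent variables z_n.
        Minv = np.linalg.inv(W.T @ W + s2 * np.eye(q))
        Ez = Xc @ W @ Minv                   # rows are E[z_n]
        sum_Ezz = n * s2 * Minv + Ez.T @ Ez  # sum over n of E[z_n z_n^T]
        # M-step: update the loading matrix, then the noise variance.
        W = Xc.T @ Ez @ np.linalg.inv(sum_Ezz)
        s2 = (np.sum(Xc ** 2)
              - 2.0 * np.sum(Ez * (Xc @ W))
              + np.trace(sum_Ezz @ W.T @ W)) / (n * d)
    return mu, W, s2


rng = np.random.default_rng(1)
W_true = np.array([[3.0, 0.0], [0.0, 2.0], [1.0, 1.0], [0.0, 0.0], [0.0, 0.0]])
Z = rng.normal(size=(500, 2))
X = Z @ W_true.T + 1.0 * rng.normal(size=(500, 5)) + 5.0  # synthetic data
mu, W, s2 = ppca_em(X, q=2)

# Closed-form ML solution for comparison.
S = np.cov(X, rowvar=False, bias=True)
lam, U = np.linalg.eigh(S)
lam, U = lam[::-1], U[:, ::-1]
s2_ml = lam[2:].mean()  # average of the discarded eigenvalues
C_ml = U[:, :2] @ np.diag(lam[:2] - s2_ml) @ U[:, :2].T + s2_ml * np.eye(5)
```

When the noise variance is very small relative to the retained eigenvalues, the scale of W converges slowly under EM, so the iteration count may need to grow accordingly.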

Lecture #10: Gaussian processes

A short introduction to Gaussian processes for regression, based entirely on Bishop's book.
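The predictive equations of Gaussian-process regression, in the form used in Bishop's book, are a mean k^T C^-1 t and a variance c - k^T C^-1 k, where C = K + I/beta and c = k(x, x) + 1/beta. The sketch below assumes a squared-exponential kernel on 1D inputs with a fixed length scale, a fixed noise precision `beta`, and synthetic targets; no hyperparameter learning is shown, and the function names are hypothetical.

```python
import numpy as np


def sq_exp_kernel(a, b, length=1.0):
    """Squared-exponential kernel k(x, x') = exp(-(x - x')^2 / (2 * length^2)) on 1D inputs."""
    return np.exp(-0.5 * (a[:, None] - b[None, :]) ** 2 / length ** 2)


def gp_predict(x_train, t_train, x_test, beta=1e4, length=1.0):
    """Predictive mean and variance of GP regression with Gaussian noise of precision beta."""
    C = sq_exp_kernel(x_train, x_train, length) + np.eye(len(x_train)) / beta
    L = np.linalg.cholesky(C)                                 # C = L L^T
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, t_train))  # C^-1 t
    k = sq_exp_kernel(x_train, x_test, length)               # N x M cross-covariances
    mean = k.T @ alpha                                        # k^T C^-1 t
    v = np.linalg.solve(L, k)
    c = 1.0 + 1.0 / beta                                      # k(x, x) + 1/beta
    var = c - np.sum(v ** 2, axis=0)                          # c - k^T C^-1 k
    return mean, var


x_train = np.linspace(0.0, 5.0, 20)
t_train = np.sin(x_train)  # synthetic, noise-free targets
x_test = np.array([1.3, 2.7, 50.0])
mean, var = gp_predict(x_train, t_train, x_test)
```

Near the training inputs the predictive variance shrinks toward the noise level 1/beta; far from them (x = 50) the prediction reverts to the prior mean 0 with variance k(x, x) + 1/beta.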