Data


The following page presents the data available in the database.

Here you can find a description of each sequence and preview videos (reachable by clicking on a sequence's image), and you can download the data.

Important: To download the data, you must have JavaScript enabled and accept cookies.

For more information about the content of the different data, see the documentation page.

List of recorded sequences

| perceiver | sequence name | duration (min:sec) | type of head | number of speaker(s) | speaker(s)/noise behaviour | visual occlusion | auditory overlap |
|---|---|---|---|---|---|---|---|
| fixed | TTOS1 | 00:20 | dummy | 1 | moving | yes | no |
| fixed | CT1OS1 | 00:18 | dummy | 1 | moving | no | no |
| fixed | CT2OS3 | 00:21 | dummy | 1 (changing appearance) | moving | no | no |
| fixed | CT3OS1 | 00:19 | dummy | 2 (one at a time) | moving | no | no |
| fixed | NTOS2 | 00:33 | dummy | 1 | moving | yes - L | no - M/N/C |
| fixed | TTMS3 | 00:23 | dummy | 3 to 4 | moving | yes | yes |
| fixed | CTMS3 | 00:25 | dummy | 1 to 3 | moving | yes | yes |
| fixed | DCMS3 | 00:48 | dummy | 2 to 4 | moving | yes | yes |
| fixed | NTMS2 | 00:26 | dummy | 2 | moving | yes - L | no - M/N/C |
| fixed | CPP1 | 02:40 | dummy | several | seated | yes | yes |
| fixed | M1 | 03:47 | dummy | 5 | seated | yes (2 not seen) | yes |
| panning | VHS1 | 00:34 | dummy | 1 | fixed | yes | no |
| panning | VHN1 | 00:32 | dummy | 1 | fixed | no | no |
| panning | ELMS3 | 00:43 | dummy | 1 | moving | yes | no - N |
| panning | ELSS1 | 00:41 | dummy | 2 | fixed | yes | yes |
| panning | ELSN1 | 00:53 | dummy | 2 | fixed | no | yes |
| moving | AH2 | 00:38 | human | 1 | moving | yes | no |
| moving | Ming2 | 01:44 | human | several | moving | yes | yes |
| moving | M3 | 03:54 | human | 5 | seated | yes | yes |
| moving | Circ1 | 01:00 | human | 5 | seated | yes | no |
| moving | AHN1 | 00:44 | human | 1 | moving | yes | no - N |
| moving | AHN4 | 00:56 | human | 1 | moving | no | no |
| moving | P1 | 01:17 | human | 5 | seated | yes | no |

Visual occlusion means either (i) an occlusion of a speaker by another speaker or by a wall, or (ii) a speaker who is outside the field of view while speaking. In the columns “auditory overlap” and “visual occlusion”, the tags mean [M]usic, [C]licks, white [N]oise and [L]ight changes.
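The cell notation used in those two columns can be read mechanically. The following is a minimal sketch, not part of the database tooling: the `Cell` type and `parse_cell` function are hypothetical names introduced here, and the parser only assumes the cell shapes that appear in the table (`yes`, `no`, an optional `- TAGS` suffix, and an optional parenthesised remark).

```python
from typing import FrozenSet, NamedTuple

# Tag letters as defined in the text above.
TAG_NAMES = {"M": "music", "C": "clicks", "N": "white noise", "L": "light changes"}


class Cell(NamedTuple):
    """Hypothetical container for one 'visual occlusion' / 'auditory overlap' cell."""
    present: bool          # True for "yes", False for "no"
    tags: FrozenSet[str]   # expanded tag names, possibly empty
    note: str              # parenthesised remark, e.g. "2 not seen"


def parse_cell(text: str) -> Cell:
    """Parse cells such as 'yes', 'yes - L', 'no - M/N/C' or 'yes (2 not seen)'."""
    # The page mixes hyphens and en-dashes; normalise to a plain hyphen.
    text = text.replace("\u2013", "-").strip()
    note = ""
    if "(" in text:
        text, _, rest = text.partition("(")
        note = rest.rstrip(") ").strip()
    head, _, tail = text.partition("-")
    answer = head.strip().lower()
    if answer not in ("yes", "no"):
        raise ValueError(f"unexpected cell value: {text!r}")
    letters = [t.strip() for t in tail.split("/") if t.strip()]
    tags = frozenset(TAG_NAMES[t] for t in letters)
    return Cell(answer == "yes", tags, note)
```

For example, `parse_cell("no - M/N/C")` reports no overlap between speakers while still recording that music, white noise and clicks are present.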

Fixed perceiver scenarios use the dummy head wearing the helmet, panning perceiver scenarios use the dummy head on a swivel chair, and moving perceiver scenarios are recorded with a human wearing the helmet and in-ear microphones.
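When scripting against the list of sequences, it can help to hold each row as a small record. The sketch below is only an illustration: the `Sequence` class, the `HEAD_BY_PERCEIVER` mapping and the helper functions are hypothetical names, and only the panning perceiver rows are copied from the table.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Sequence:
    """Hypothetical record for one row of the sequence table."""
    name: str
    duration: str   # "min:sec", as written in the table
    perceiver: str  # "fixed", "panning" or "moving"

    @property
    def seconds(self) -> int:
        minutes, secs = self.duration.split(":")
        return int(minutes) * 60 + int(secs)


# Head type per perceiver group, as stated in the paragraph above.
HEAD_BY_PERCEIVER = {"fixed": "dummy", "panning": "dummy", "moving": "human"}

# Panning perceiver rows, copied from the table.
PANNING = [
    Sequence("VHS1", "00:34", "panning"),
    Sequence("VHN1", "00:32", "panning"),
    Sequence("ELMS3", "00:43", "panning"),
    Sequence("ELSS1", "00:41", "panning"),
    Sequence("ELSN1", "00:53", "panning"),
]


def total_seconds(sequences: Iterable[Sequence]) -> int:
    """Sum the durations of the given sequences, in seconds."""
    return sum(s.seconds for s in sequences)


def as_min_sec(seconds: int) -> str:
    """Format a number of seconds back into the table's min:sec notation."""
    return f"{seconds // 60:02d}:{seconds % 60:02d}"
```

With these rows, `as_min_sec(total_seconds(PANNING))` gives the total recorded time for the panning perceiver group.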

Scenario schematics

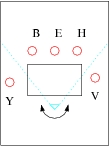

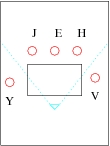

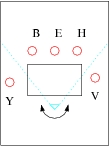

The scenarios are illustrated by a schematic.

- Lines indicate actor and perceiver 2D trajectories in the room.

- A full line indicates “speaking while walking”.

- A dashed line means “quiet while walking”.

- When fixed, the field of view is drawn in blue.

- Actors are shown as circles, and stationary sound sources as triangles.

- The rectangle represents an occluding wall.

- The tags mean [M]usic, [C]licks, [L]ight changes.

Part 0: Video calibration data

Several calibration sequences were acquired in order to obtain accurate calibration results. We provide the best two.


Part 1: fixed perceiver

The aim for the fixed perceiver scenarios is to enable evaluation of audio, video and AV tracking and clustering in scenarios with various challenges such as speakers walking in and out of field of view, walking behind a wall, speakers changing appearance and multiple, simultaneous sound sources. These are covered by the following scenarios.

1. Scenarios with one speaker

1.1. Tracking test - One speaker (TTOS)

- Participants: One speaker.

- Development: One speaker, walking while speaking continuously throughout the whole scene. The speaker moves in front of the camera and passes behind it. He reappears from the right and turns to the cameras.

Recorded sequence: TTOS1

1.2. Clustering test - One speaker (CT1OS)

- Participants: One speaker.

- Development: One speaker, walking. The speaker moves while speaking in front of the camera and passes behind it from the left. As soon as he leaves the field of view, the actor becomes silent. Only on reappearing from the right does he start speaking again and turn to the cameras.

Recorded sequence: CT1OS1

1.3. Clustering test 2 - One speaker (CT2OS)

- Participants: One speaker.

- Development: Same scenario as CT1OS, again with one walking speaker. The main distinction is that, when reappearing, the actor has changed appearance (taken off jacket, put on glasses).

Recorded sequence: CT2OS3

1.4. Clustering test 3 - One speaker (CT3OS)

- Participants: One speaker - Two actors.

- Development: Two actors, only one seen and heard at a time. The first speaker moves toward the camera then disappears from the field of view and stops talking. The second speaker enters the field of view while speaking and faces the cameras.

Recorded sequence: CT3OS1

1.5. Noise test - one speaker (NTOS)

- Participants: One speaker, music source (M), wall, additional light source (L), clicker-chirper (C).

- Development: One speaker, walking. The actor walks behind a wall and returns to his initial position, always speaking. Various audio noises like clicks and music are present. The lighting condition is intentionally modified.

Recorded sequence: NTOS2

2. Scenarios with multiple speakers

2.1. Tracking test - Multiple speakers (TTMS)

- Participants: Four speakers.

- Development: A more complex tracking scenario than the single speaker TTOS. Four actors are initially in the scene. As they start speaking (and go on speaking throughout the test), they move around; one person exits the scene, walks behind the camera while talking, and reappears.

Recorded sequence: TTMS3

2.2. Clustering test - Multiple speakers (CTMS)

- Participants: Four speakers.

- Development: A more complex clustering test scenario than the single speaker CTOS. Here four actors are initially in the scene. As they start speaking and moving around, two people exit the scene, stop talking, reappear and start talking again.

Recorded sequence: CTMS3

2.3. Dynamic changes - Multiple speakers (DCMS)

- Participants: Five speakers.

- Development: Five actors in total. Initially there are two speakers, then a third joins, one leaves, and later on a fifth joins. Then another two leave. All actors speak while in the scene and move around.

Recorded sequence: DCMS3

2.4. Noise test - multiple speakers (NTMS)

- Participants: Two speakers, music source (M), wall, additional light source (L), clicker-chirper (C).

- Development: Similar to the one-speaker noise test, NTOS. Two speakers are talking, occasionally walking behind a screen. Meanwhile, music and clicks are heard in the background.

Recorded sequence: NTMS2

Part 2: varying head movement of the perceiver

The panning perceiver scenarios were recorded to obtain recordings of controlled cues from an actively moving head. They are all recorded using the dummy head and torso strapped onto a swivel chair. During recordings, the chair is panned from side to side at the same time as the scenario is “acted” out.

3. Varying head - noise (VHN) and speech (VHS)

- Participants: Dummy on swivel chair.

- Development: A single speaker standing still while speaking, or a loudspeaker playing white noise, is placed at 0° azimuth. The perceiver starts facing the sound source and then moves with periodic left-right movements. The purpose of this sequence is to measure the effects of the perceiver's head movements on binaural cues for localisation.
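To illustrate why head movement matters for binaural cues, the sketch below computes the azimuth of a source at 0° relative to a panning head, and the resulting interaural time difference (ITD) under Woodworth's spherical-head approximation. Everything numeric here is an assumption made for illustration: the sinusoidal pan, its amplitude and period, the head radius and the sign convention are not taken from the recordings, and the ITD model is a textbook approximation rather than anything measured on the dummy head.

```python
import math

HEAD_RADIUS_M = 0.0875       # assumed spherical-head radius (illustrative)
SPEED_OF_SOUND_M_S = 343.0   # speed of sound in air at about 20 °C


def wrap_deg(angle: float) -> float:
    """Wrap an angle in degrees to the interval [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def head_yaw_deg(t: float, amplitude_deg: float = 45.0, period_s: float = 4.0) -> float:
    """Assumed periodic left-right pan, modelled as a sinusoid starting at 0°."""
    return amplitude_deg * math.sin(2.0 * math.pi * t / period_s)


def relative_azimuth_deg(source_az_deg: float, yaw_deg: float) -> float:
    """Azimuth of a room-fixed source as seen from the rotated head."""
    return wrap_deg(source_az_deg - yaw_deg)


def woodworth_itd_s(rel_az_deg: float) -> float:
    """ITD from Woodworth's formula (r / c) * (phi + sin(phi)), valid for |phi| <= 90°."""
    phi = math.radians(rel_az_deg)
    if abs(phi) > math.pi / 2.0:
        raise ValueError("Woodworth's formula is used here only for |azimuth| <= 90°")
    return HEAD_RADIUS_M / SPEED_OF_SOUND_M_S * (phi + math.sin(phi))


if __name__ == "__main__":
    # Source at 0° azimuth, as in the VHN/VHS scenarios.
    for t in (0.0, 0.5, 1.0, 2.0, 3.0):
        rel = relative_azimuth_deg(0.0, head_yaw_deg(t))
        print(f"t={t:.1f}s  relative azimuth={rel:+.1f}°  ITD={woodworth_itd_s(rel) * 1e6:+.0f} µs")
```

Even with a static source, the relative azimuth and hence the ITD sweep back and forth as the head pans, which is the kind of controlled cue variation these sequences are intended to capture.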

Recorded sequences: VHN1, VHS1

4. Ego location - stationary noise (ELSN) and stationary speech (ELSS)

- Participants: Dummy on swivel chair. Various noise and speech sources placed at different locations around the room.

- Development: Two loudspeakers playing white noise, or two people speaking, are positioned at -15° and 45° azimuth respectively. The dummy is moved from side to side in a “random” fashion. This scenario provides data for investigating to what degree ego-location is possible using audio, video and AV cues.
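As a toy illustration of the ego-location idea, the sketch below recovers the head's pan angle from the directions at which the two static sources are observed. It assumes that the room-frame azimuths (-15° and 45°) are known, that each observed azimuth has already been matched to its source, and that the per-source estimates are simply averaged on the circle; `estimate_yaw_deg` is a hypothetical helper, not a method used to produce or annotate the data.

```python
import math
from typing import Sequence

# Room-frame azimuths of the two static sources, from the scenario description.
SOURCE_AZIMUTHS_DEG = (-15.0, 45.0)


def estimate_yaw_deg(
    observed_deg: Sequence[float],
    sources_deg: Sequence[float] = SOURCE_AZIMUTHS_DEG,
) -> float:
    """Estimate head yaw from head-relative azimuths of known, matched sources.

    Each pair gives one estimate (room azimuth minus observed azimuth); the
    estimates are combined with a circular mean so that wrap-around at ±180°
    does not bias the result.
    """
    if len(observed_deg) != len(sources_deg):
        raise ValueError("need exactly one observation per known source")
    sum_cos = sum_sin = 0.0
    for src, obs in zip(sources_deg, observed_deg):
        diff = math.radians(src - obs)
        sum_cos += math.cos(diff)
        sum_sin += math.sin(diff)
    return math.degrees(math.atan2(sum_sin, sum_cos))
```

For instance, if the sources are observed at -35° and 25° relative to the head, `estimate_yaw_deg([-35.0, 25.0])` returns a yaw of about 20°.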

Recorded sequences: ELSN1, ELSS1

5. Ego location - moving speech (ELMS)

- Participants: Dummy on swivel chair.

- Development: One speaker walks around the scene and may disappear from the field of view. Two additional static sound sources playing white noise are placed at -15° and 45° azimuth. The perceiver is static during the first part of the sequence and then pans randomly. The purpose of this sequence is to study whether the head movement can be inferred from binaural dynamics, and to highlight the complementarity between the audio and video modalities.

Recorded sequence: ELMS3

6. Active hearing (AH) - only speech, speech + noise, only noise

- Participants: A blindfolded listener, seated on a swivel chair, is asked to stay facing the moving target speaker. The speaker talks while slowly moving around the room following an unpredictable pattern.

- Development:

- AH: One speaker talking while slowly moving around the room following an unpredictable pattern. Blindfolded and seated on a swivel chair, the listener is asked to stay facing the moving speaker. The research question that may be addressed with this sequence is behavioural: how does the perceiver move in order to track the sound source?

- AHN: The same scenario as AH, with one moving speaker. An additional static sound source produces stationary noise. The research purpose is similar to the previous scenario, here in a challenging noisy environment.
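One simple way to quantify such tracking behaviour is the angular error between the speaker's azimuth and the listener's head orientation over time. The sketch below assumes both are available as equally sampled angle tracks in degrees; the function names are hypothetical and the page does not specify how such tracks would be obtained from the recordings.

```python
import math
from typing import List, Sequence


def wrap_deg(angle: float) -> float:
    """Wrap an angle in degrees to the interval [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def tracking_errors_deg(speaker_az: Sequence[float], head_yaw: Sequence[float]) -> List[float]:
    """Signed angular error (speaker minus head) per sample, wrapped to [-180, 180)."""
    if len(speaker_az) != len(head_yaw):
        raise ValueError("both tracks must have the same number of samples")
    return [wrap_deg(s - h) for s, h in zip(speaker_az, head_yaw)]


def rms(values: Sequence[float]) -> float:
    """Root-mean-square of a non-empty sequence."""
    if not values:
        raise ValueError("need at least one value")
    return math.sqrt(sum(v * v for v in values) / len(values))
```

The wrapping step matters when the speaker passes behind the listener: a speaker at 175° and a head at -175° are only 10° apart, which `tracking_errors_deg([175.0], [-175.0])` reports as -10°.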

Recorded sequences: AH2, AHN1, AHN4

Part 3: moving perceiver

The aim of the scenarios with a moving perceiver is to provide very challenging audio-visual situations of the kind that arise in real-life environments.

7. Cocktail party - passive (CPP)

- Participants: Seven speakers.

- Development: 7 actors in total, 6 in scene and one to the left of the fixed perceiver. Two groups of conversation (one immediately in front of and one further away from the dummy head) are formed. People are seated and generally not moving a lot. At some point one speaker from the furthest away group gets up and joins the conversation of the front group. This setup makes for a very challenging auditory and visual scene.

Recorded sequence: CPP1

8. Mingling (Ming)

- Participants: Perceiver is moving around/mingling in cocktail party situation.

- Development: Small groups of people chatting are placed around the person wearing the acquisition helmet. He turns around to join in different conversations throughout the scenario. This is a very challenging scene with lots of people talking from all directions and with people moving in and out of the field of view.

Recorded sequence: Ming2

9. Meeting (M)

Recorded sequences: M3, M1

- Participants: Five speakers.

- Development:

- M1: Five actors are seated around a table, three are visible to the fixed perceiver (dummy head); one is to the left and one is to the right of the dummy. Initially all join into the same conversation and later on two subgroups of conversations are formed.

- M3: Five speakers are sitting around a table having a conversation with normal turn taking. The sequence starts out with each speaker in turn saying their name and affiliation. The perceiver person is instructed to move naturally in terms of who to face during the conversation.

10. Panel (P) and Circle (Circ)

Recorded sequences: Circ1, P1

- Participants: A listener seated on a swivel chair is asked to stay facing the person speaking. Speakers sit around a table “meeting-style” and have a conversation, taking turns. The listener is asked to look at the dominant speaker. Speech is non-informational.

- Development:

- P1: This scenario mimics a person listening in on a conversation with non-overlapping speech and looking at the person speaking. Five people are positioned around a table and take turns counting out loud.

- Circ1: Several speakers positioned in a circle around the perceiver. Each person takes it in turn to speak and the person wearing the acquisition helmet attempts to look at them as soon as possible after they've started speaking.
