Experimental Setup and Data Acquisition

Since the purpose of the Ravel data set is to provide data for benchmarking methods and techniques that address HRI challenges, the acquisition setup must meet two requirements: a robocentric collection of accurate data and a realistic recording environment.

The environment

All the sequences were recorded in a regular meeting room. Although two diffuse lights were included in the setup to provide good illumination, no devices were used to control either the illumination changes or the sound characteristics of the room. Hence, the recordings are affected by exterior illumination changes, acoustic reverberations, outside noise, and all kinds of audio and visual interferences and artifacts present in natural indoor scenes.

The recording device

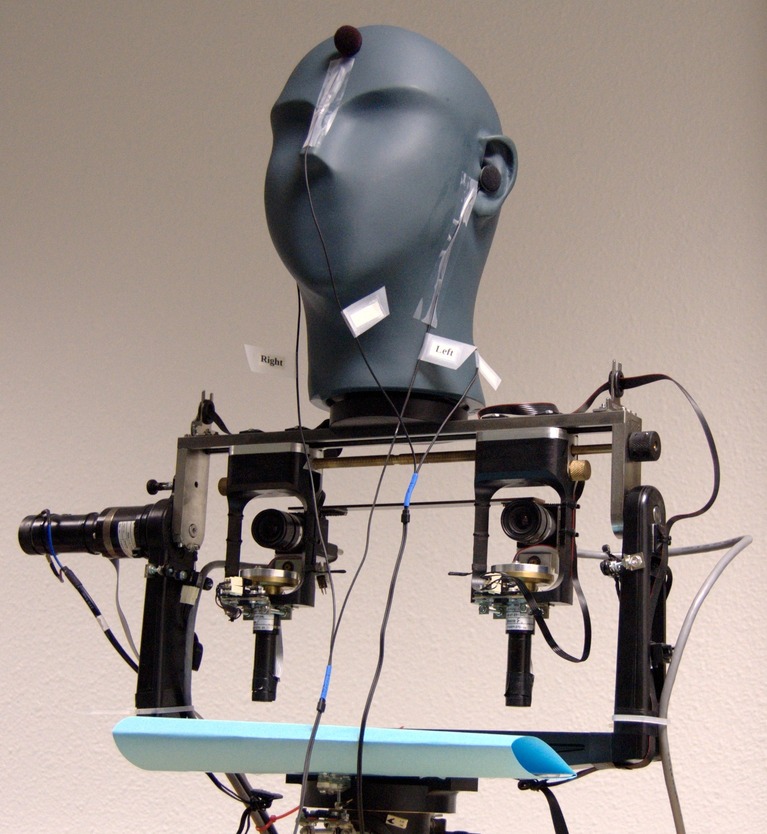

The POPEYE robot head is the audio-visual recording device used to acquire the Ravel data set. A pair of color cameras as well as the front and left microphones are shown in the picture. Four rotational degrees of freedom are available.

The recording device was designed by the University of Coimbra in the framework of the POP European project. Named POPEYE, this robot is equipped with four microphones and two cameras that provide its auditory and visual sensing. The four microphones were mounted on a dummy head, as shown in the figure, designed to imitate the filtering properties of a real human head. Both cameras and the dummy head were mounted on a four-motor structure that provides accurate motion: pan, tilt and camera vergence. The POPEYE robot has several remarkable properties. First, since the device resembles a human, it is possible to carry out psycho-physical studies using the data acquired with it. Second, the combination of the dummy head and the four microphones allows for a comparison between using two microphones with the Head-Related Transfer Function (HRTF) and using four microphones without HRTF. Third, the stability and accuracy of the motors ensure the repeatability of the experiments. Finally, the use of cameras and microphones gives the POPEYE robot head audio-visual sensing in a single device that geometrically links all six sensors.

The acquired data

For each recorded sequence, we acquired several streams of data distributed in two groups: the primary data and the secondary data. While the first group is the data acquired using the POPEYE robot’s sensors, the second group was acquired by means of devices external to the robot. The primary data consist of the audio and video streams captured using POPEYE. Both cameras, left and right, have a resolution of 1024 by 768 pixels and two operating modes: 8-bit gray-scale images at 30 FPS or 16-bit YUV color images at 15 FPS. The four Soundman OKM II Classic Solo microphones mounted on the Sennheiser MKE 2002 dummy head were linked to the computer via the Behringer ADA8000 Ultragain Pro-8 digital external sound card, sampling at 48 kHz. The secondary data are meant to ease the task of manual annotation for ground truth. They consist of one Flock of Birds stream (by Ascension Technology), which provides the absolute position of the actor in the scene, and up to four PYLE PRO PDWM4400 wireless microphones, which capture the audio track of each actor.
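As a quick check on the two camera operating modes, the raw data rate per camera can be worked out from the figures above. The sketch below is only arithmetic on the stated resolution, bit depth and frame rate; the function name is hypothetical, and it assumes uncompressed frames with no container or transport overhead.

```python
# Raw (uncompressed) data rate of one camera in each operating mode.
# Resolution, bit depths and frame rates come from the text; everything
# else (function name, no-overhead assumption) is illustrative.
WIDTH, HEIGHT = 1024, 768


def video_rate_bytes_per_s(bits_per_pixel: int, fps: int) -> int:
    """Bytes per second produced by one camera, ignoring any overhead."""
    bytes_per_frame = WIDTH * HEIGHT * bits_per_pixel // 8
    return bytes_per_frame * fps


gray_rate = video_rate_bytes_per_s(bits_per_pixel=8, fps=30)
color_rate = video_rate_bytes_per_s(bits_per_pixel=16, fps=15)

print(gray_rate, color_rate)
```

Under these assumptions both modes produce the same raw byte rate per camera: the color mode doubles the bits per pixel and halves the frame rate.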

Device calibration and synchronization

Both cameras were synchronized by an external trigger controlled by software. The audio-visual synchronization was done by means of a clapping device. This device provides an event that is sharp, and hence easy to detect, in both the audio and the video signals. Regarding the visual calibration, the state-of-the-art method described in [1] uses several image pairs to provide an accurate calibration. The audio-visual calibration is done manually by annotating the position of the microphones with respect to the cyclopean coordinate frame [2]. We could also apply the procedure described in [3] to refine these positions.
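To illustrate how a sharp clap event can align the two modalities, here is a minimal sketch. It is not the procedure used for the Ravel recordings: the function names are hypothetical, and `frame_activity` is an assumed per-frame measure (for example, the mean absolute difference between consecutive frames) that peaks when the clapper closes.

```python
from typing import Sequence


def sharpest_onset(signal: Sequence[float]) -> int:
    """Index of the sample with the largest rise in magnitude over its predecessor."""
    best_index, best_jump = 0, float("-inf")
    for i in range(1, len(signal)):
        jump = abs(signal[i]) - abs(signal[i - 1])
        if jump > best_jump:
            best_index, best_jump = i, jump
    return best_index


def av_offset_seconds(
    audio: Sequence[float],
    audio_rate_hz: float,
    frame_activity: Sequence[float],
    video_fps: float,
) -> float:
    """Time of the clap in the audio stream minus its time in the video stream."""
    audio_time = sharpest_onset(audio) / audio_rate_hz
    video_time = sharpest_onset(frame_activity) / video_fps
    return audio_time - video_time


# Synthetic example: one second of silence with a clap at sample 36000
# (48 kHz audio), and a video activity spike at frame 15 (30 FPS).
audio = [0.0] * 48000
audio[36000] = 1.0
frame_activity = [0.0] * 30
frame_activity[15] = 1.0

offset = av_offset_seconds(audio, 48000, frame_activity, 30)
print(offset)
```

A positive offset means the clap appears later in the audio stream than in the video stream, so the audio would be shifted earlier by that amount. Real recordings would need a more robust onset detector, since reverberation and background noise can blur the rise.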

References

- [1] J.-Y. Bouguet. Camera calibration toolbox for Matlab, 2008. URL.

- [2] M. Hansard and R. P. Horaud. Cyclopean geometry of binocular vision. JOSA, 25(9):2357-2369, September 2008.

- [3] V. Khalidov, F. Forbes, and R. P. Horaud. Calibration of a binocular-binaural sensor using a moving audio-visual object. Technical Report RR-XXXX, INRIA, March 2011. Submitted for journal publication.

The Ravel Corpora is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 3.0 Unported License.